ChatGPT API Rate Limits: Hands-on Tests and Maximizing Throughput


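To prevent abuse, avoid server overload and ensure fairness, both OpenAI's own API and the Azure OpenAI API enforce rate limits that cap requests per minute (RPM) and tokens per minute (TPM). This is a problem you run into constantly when using the ChatGPT API for batch jobs. In my previous post on integrating the ChatGPT API with PowerShell to run batch tasks, I mentioned a simple workaround: add a delay between calls to stretch the interval. The catch is choosing that interval. Too short and you exceed the rate limit; too long and you waste time waiting, which stretches out the total run time. A better approach is to adjust dynamically to the actual call rate and squeeze out as much throughput as the limit allows.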

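Using the Azure OpenAI GPT-4o API as an example, this post runs a few simple tests to probe the rate limits, then tries to squeeze out as much throughput as possible to make full use of the available capacity.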

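Starting from the sample code in the previous post, I added a few lines that parse the response to count the calls made and the tokens used. When the rate limit is exceeded, the service returns HTTP 429 TooManyRequests; by catching `System.Net.WebException` and reading `Exception.Response.GetResponseStream()`, you can retrieve the detailed error message.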
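A minimal sketch of that parsing step, assuming the response body carries the standard Chat Completions `usage` object (`prompt_tokens`, `completion_tokens`, `total_tokens`). The helper name `Get-UsageSummary` is hypothetical, and the sample JSON is hand-written for illustration, not captured from Azure.

```powershell
# Hypothetical helper: summarize token usage from a Chat Completions response body
function Get-UsageSummary([string]$responseJson) {
    $result = $responseJson | ConvertFrom-Json
    $usage = $result.usage
    # Same format as the log line printed by CallComplete: total(prompt + completion)
    "$($usage.total_tokens)($($usage.prompt_tokens) + $($usage.completion_tokens)) tokens"
}

# Hand-written sample body (values are made up for illustration)
$sample = @'
{
  "choices": [ { "message": { "role": "assistant", "content": "Zhang Fei" } } ],
  "usage": { "prompt_tokens": 25, "completion_tokens": 5, "total_tokens": 30 }
}
'@
Get-UsageSummary $sample   # "30(25 + 5) tokens"
```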

The first experiment tests the RPM limit.

```powershell
# Uses SetSystemPrompt, DecryptApiKey, $Global:systemPrompt and $Global:apiUrl from the previous post's script
# (they are also included in the complete program at the end of this post)
$sw = [System.Diagnostics.Stopwatch]::StartNew()
# Timestamp (seconds since start) -> tokens used by the call made at that time
$tokenCountDict = @{}

function CallComplete($prompt) {
    $fullPrompt = $Global:systemPrompt + ' ' + $prompt
    $payLoad = [PSCustomObject]@{
        messages    = @(
            [PSCustomObject]@{
                role    = "system"
                content = @(
                    [PSCustomObject]@{
                        type = "text"
                        text = $fullPrompt
                    }
                )
            }
        )
        # Sampling options belong at the top level of the request body, not inside a message
        temperature = 0.7
        top_p       = 0.95
        max_tokens  = 2048
    }
    $json = $payLoad | ConvertTo-Json -Depth 5
    $wc = New-Object System.Net.WebClient
    $wc.Headers.Add('Content-Type', 'application/json; charset=utf-8')
    $wc.Headers.Add('api-key', (DecryptApiKey))
    # Keep the timestamp numeric: a formatted string would turn the -ge/-lt checks below into string comparisons
    $timeStampSecs = [Math]::Round($sw.Elapsed.TotalSeconds, 1)
    try {
        $response = $wc.UploadData($Global:apiUrl, [System.Text.Encoding]::UTF8.GetBytes($json))
    }
    catch [System.Net.WebException] {
        # HTTP 429 (or any other HTTP error): print the JSON error body, then rethrow
        $reader = New-Object System.IO.StreamReader($_.Exception.Response.GetResponseStream())
        Write-Host $reader.ReadToEnd() -ForegroundColor Red
        throw
    }
    $result = [System.Text.Encoding]::UTF8.GetString($response) | ConvertFrom-Json
    $usage = $result.usage
    $Global:tokenCount += $usage.total_tokens
    $tokenCountDict[$timeStampSecs] = $usage.total_tokens

    # Sum tokens and requests over the last 60 seconds and drop older entries
    $tokenCount = 0
    $reqCount = 0
    $lessThan1Min = $timeStampSecs - 60
    $minTimeStamp = [double]::MaxValue
    # Loop over a copy of the keys: removing entries while enumerating the hashtable itself throws
    foreach ($key in @($tokenCountDict.Keys)) {
        if ($key -ge $lessThan1Min) {
            $tokenCount += $tokenCountDict[$key]
            $reqCount++
            if ($key -lt $minTimeStamp) { $minTimeStamp = $key }
        }
        else {
            $tokenCountDict.Remove($key)
        }
    }
    Write-Host "$($timeStampSecs)s $($usage.total_tokens)($($usage.prompt_tokens) + $($usage.completion_tokens)) tokens | $tokenCount TPM | $reqCount RPM | ($minTimeStamp ~ $timeStampSecs)" -ForegroundColor Magenta
    return $result.choices[0].message.content
}

# System prompt: "Please provide English transliterations of the following names:"
SetSystemPrompt '請提供下列人名英文音譯:'
[string[]] $names = "張飛、趙雲、黃忠、魏延、馬超、孔明、劉備、關羽、曹操、孫權、周瑜、孫策、呂布、袁紹、劉表、劉璋、張角、董卓、貂蟬、王允".Split("、")

# Call repeatedly, with no delay, until the rate limit is hit (or Ctrl+C)
while ($true) {
    $names | ForEach-Object {
        $res = CallComplete $_
        Write-Host $res.Substring(0, [Math]::Min($res.Length, 32)) '...'
    }
}
```
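The 60-second tally inside `CallComplete` can be pulled out into a standalone function, which makes it easy to test on its own. This is a minimal sketch: `Get-WindowStats` is a hypothetical name, and like the loop above it only counts what this client has sent, so the service's own accounting (which also covers other callers) may differ.

```powershell
# Hypothetical helper: 60-second sliding-window tally, the same idea as the loop inside CallComplete.
# $log maps a call's timestamp (numeric seconds) to the tokens that call used; old entries are pruned in place.
function Get-WindowStats([hashtable]$log, [double]$now, [double]$windowSecs = 60) {
    $tokens = 0
    $requests = 0
    $cutoff = $now - $windowSecs
    # Iterate over a copy of the keys so entries can be removed inside the loop
    foreach ($ts in @($log.Keys)) {
        if ($ts -ge $cutoff) {
            $tokens += $log[$ts]
            $requests++
        }
        else {
            $log.Remove($ts)
        }
    }
    [PSCustomObject]@{ TPM = $tokens; RPM = $requests }
}

# Three calls; at t = 62.0 the window is [2.0, 62.0], so the call at t = 1.0 drops out (TPM 630, RPM 2)
$log = @{}
$log[1.0] = 300
$log[30.5] = 320
$log[62.0] = 310
Get-WindowStats $log 62.0
```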

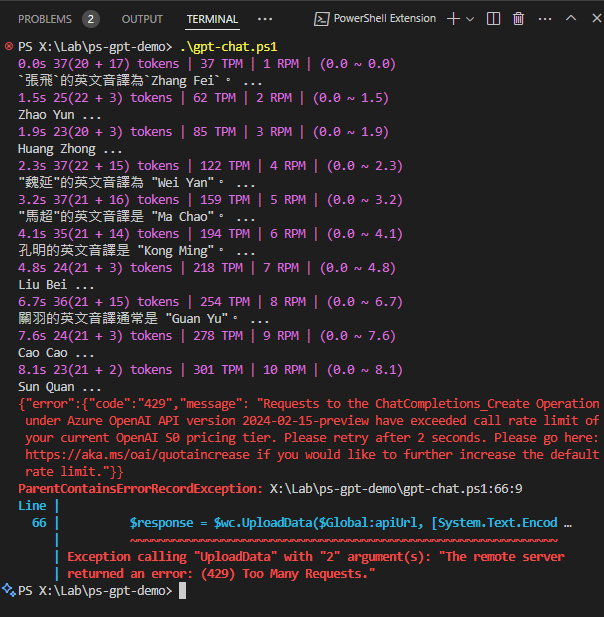

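I designed a simple task, transliterating the names of Three Kingdoms figures into English, and easily reached 10 calls within 5 seconds (token usage was low, just over 300). The 11th call then failed with HTTP 429, asking for a wait of 2 or 5 seconds before retrying: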

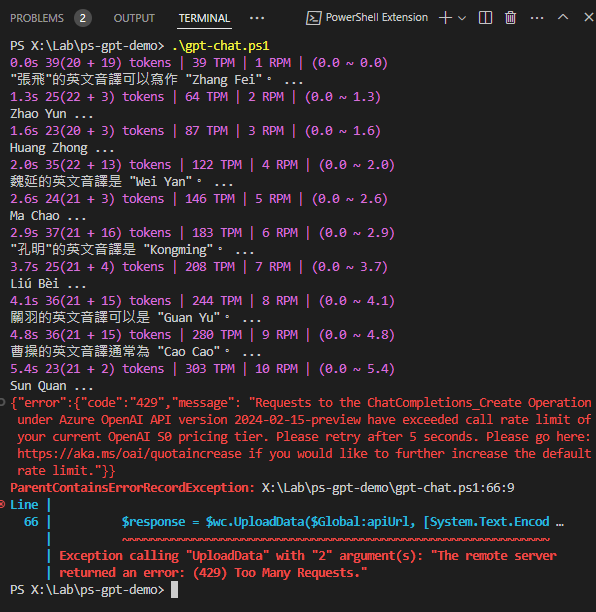

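Next I added `Start-Sleep -Seconds 1` to slow the calls down so that 10 calls took more than ten seconds. This time HTTP 429 did not appear until 15 requests had accumulated by 33s, and it asked for a 26-second wait before retrying: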

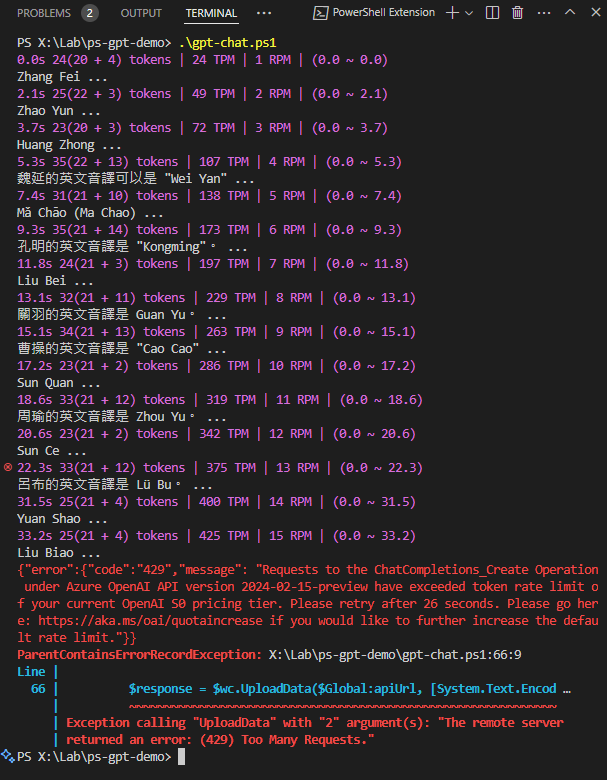

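The second experiment switches to summarizing long articles to probe the TPM limit. I ran it twice (more than 60 seconds apart) and hit HTTP 429 at 4532 TPM after 8s and at 4543 TPM after 5s, with requests to wait 52s and 55s respectively before retrying.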

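From these results, my guess is that the TPM limit is counted per minute and sits at roughly 5K, and that the wait after exceeding it can be estimated as 60 minus the number of seconds of continuous calling (60 - 8 = 52, 60 - 5 = 55). The RPM limit seems to be counted in a more complicated way. I saw at least two cases: 10 calls within ten seconds, and 15 calls within roughly 30 seconds. The former asked for a wait of 2 or 5 seconds for no apparent reason; the latter roughly fits the same 60-minus-elapsed estimate (60 - 33 = 27, close to the requested 26).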
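The wait-time rule of thumb above can be written as a one-line function and checked against the observed values. This only encodes the empirical guess from these tests, not a documented formula, and `Get-EstimatedWait` is a hypothetical name.

```powershell
# Hypothetical helper: estimated wait after a 429 = window length - seconds of continuous calling
function Get-EstimatedWait([double]$elapsedSecs, [double]$windowSecs = 60) {
    [Math]::Max(0.0, $windowSecs - $elapsedSecs)
}

# Observations from the tests above: seconds of calling vs. wait requested by the service
$observations = @(
    @{ Elapsed = 8;  Requested = 52 }   # TPM test, run 1
    @{ Elapsed = 5;  Requested = 55 }   # TPM test, run 2
    @{ Elapsed = 33; Requested = 26 }   # RPM test with a 1-second delay
)
foreach ($o in $observations) {
    "{0}s of calls -> estimated {1}s, requested {2}s" -f $o.Elapsed, (Get-EstimatedWait $o.Elapsed), $o.Requested
}
```

The estimate matches the two TPM runs exactly and is off by one second for the RPM run, which is consistent with treating it as a rough guide only.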

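Note: rate limits depend on the subscription; an enterprise account should get somewhat higher limits than an MSDN subscription (reference).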

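Putting these observations together: if other services are using the same API at the same time, it is hard to know the overall remaining quota just by counting your own calls. Pausing for the wait time specified in the HTTP 429 response is the more practical approach. So I designed the program to "pause for the specified number of seconds and then retry whenever HTTP 429 is returned", which in theory achieves the maximum throughput the rate limit allows.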
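The pause-and-retry strategy can also be kept separate from the HTTP code as a generic wrapper. A minimal sketch: `Invoke-WithRetryAfter` and its parameters are hypothetical, and `GetRetrySeconds` stands for whatever logic extracts the service-specified wait from an exception (for Azure OpenAI that means reading the JSON error body from the `WebException` response, as the complete program does).

```powershell
# Hypothetical helper: run $Action; if it throws and $GetRetrySeconds returns a wait time,
# sleep that many seconds and try again, up to $MaxRetries retries. Other errors are rethrown.
function Invoke-WithRetryAfter {
    param(
        [scriptblock]$Action,
        [scriptblock]$GetRetrySeconds,   # receives the exception; returns seconds to wait, or $null if not retryable
        [int]$MaxRetries = 5
    )
    $attempt = 0
    while ($true) {
        try {
            return & $Action
        }
        catch {
            $secs = & $GetRetrySeconds $_.Exception
            if ($null -eq $secs -or $attempt -ge $MaxRetries) { throw }
            $attempt++
            Write-Host "Rate limited, retry $attempt/$MaxRetries in $secs seconds" -ForegroundColor Cyan
            Start-Sleep -Seconds $secs
        }
    }
}

# Usage with a fake action that is "rate limited" twice before succeeding
$script:calls = 0
$answer = Invoke-WithRetryAfter -Action {
    $script:calls++
    if ($script:calls -lt 3) { throw 'Please retry after 1 second' }
    'done'
} -GetRetrySeconds {
    param($ex)
    $m = [regex]::Match($ex.Message, 'retry after (?<s>\d+) second')
    if ($m.Success) { [int]$m.Groups['s'].Value } else { $null }
}
$answer
```

Letting the caller supply `GetRetrySeconds` keeps the wrapper independent of how a particular API reports its wait time.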

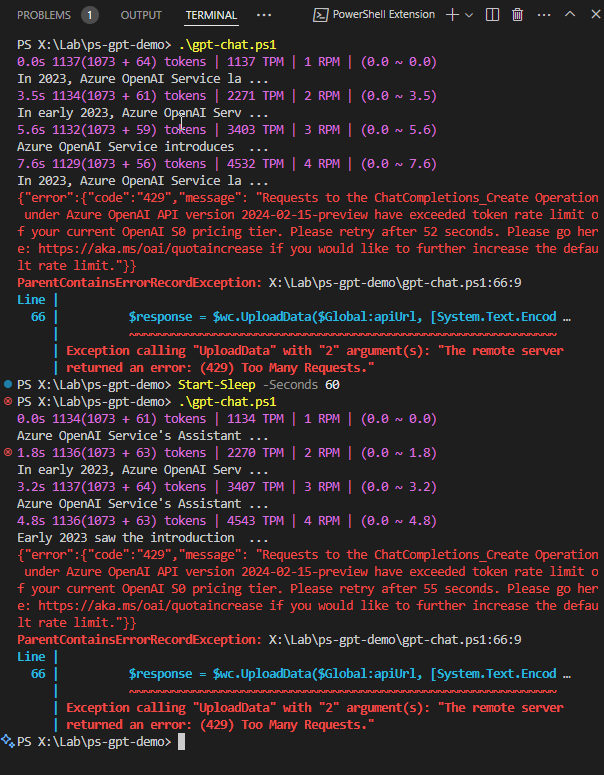

我簡單試做了一個版本,實測效果如下:

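The complete program is below. The principle is simple: catch `System.Net.WebException` and check whether the error code is 429; if so, use the regex `retry after (?<s>\d+) second` to extract the number of seconds to wait, wait long enough with `Start-Sleep`, and then resend the request. This lets the batch job run right up against the rate limit and achieve maximum throughput.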

```powershell
param ([string]$question)

$ErrorActionPreference = "Stop"
$settingsPath = '.\azure-openai.settings'

# Load the API URL and encrypted API key from the settings file; prompt for any missing value and save it
function ReadApiSettings() {
    try {
        if (Test-Path $settingsPath) {
            $settings = Get-Content $settingsPath | ConvertFrom-Json
            $apiUrl = $settings.apiUrl
            $apiKey = $settings.apiKey
        }
    }
    catch { }
    if ([string]::IsNullOrEmpty($apiUrl) -or [string]::IsNullOrEmpty($apiKey)) {
        Write-Host "Please set Azure OpenAI url and key" -ForegroundColor Yellow
        if ([string]::IsNullOrEmpty($apiUrl)) {
            Write-Host " ex: https://<host-name>.openai.azure.com/openai/deployments/<deploy-name>/chat/completions?api-version=2024-02-15-preview"
            Write-Host "API Url: " -ForegroundColor Cyan
            $apiUrl = Read-Host
        }
        if ([string]::IsNullOrEmpty($apiKey)) {
            Write-Host "API Key: " -ForegroundColor Cyan
            # Saved as an encrypted standard string (on Windows: DPAPI, current user only)
            $apiKey = Read-Host -AsSecureString | ConvertFrom-SecureString
        }
        @{
            apiUrl = $apiUrl
            apiKey = $apiKey
        } | ConvertTo-Json | Set-Content -Path $settingsPath
    }
    $Global:apiUrl = $apiUrl
    $Global:apiKey = $apiKey
}

ReadApiSettings

$Global:systemPrompt = 'You are an AI assistant that helps people find information.'
function SetSystemPrompt($prompt) { $Global:systemPrompt = $prompt }

# Decrypt the stored key back to plain text for the api-key header
function DecryptApiKey() {
    $secStr = $Global:apiKey | ConvertTo-SecureString
    $BSTR = [System.Runtime.InteropServices.Marshal]::SecureStringToBSTR($secStr)
    try {
        return [System.Runtime.InteropServices.Marshal]::PtrToStringBSTR($BSTR)
    }
    finally {
        # Zero out and release the unmanaged copy of the key
        [System.Runtime.InteropServices.Marshal]::ZeroFreeBSTR($BSTR)
    }
}

$sw = [System.Diagnostics.Stopwatch]::StartNew()
# Timestamp (seconds since start) -> tokens used by the call completed at that time
$tokenCountDict = @{}

function CallComplete($prompt) {
    $fullPrompt = $Global:systemPrompt + ' ' + $prompt
    $payLoad = [PSCustomObject]@{
        messages    = @(
            [PSCustomObject]@{
                role    = "system"
                content = @(
                    [PSCustomObject]@{
                        type = "text"
                        text = $fullPrompt
                    }
                )
            }
        )
        # Sampling options belong at the top level of the request body
        temperature = 0.7
        top_p       = 0.95
        max_tokens  = 2048
    }
    $body = [System.Text.Encoding]::UTF8.GetBytes(($payLoad | ConvertTo-Json -Depth 5))

    $retry = 5   # maximum number of 429 retries for this call
    while ($true) {
        # Fresh WebClient and headers for every attempt
        $wc = New-Object System.Net.WebClient
        $wc.Headers.Add('Content-Type', 'application/json; charset=utf-8')
        $wc.Headers.Add('api-key', (DecryptApiKey))
        try {
            $response = $wc.UploadData($Global:apiUrl, $body)
            break
        }
        catch [System.Net.WebException] {
            # No HTTP response at all (e.g. network failure): nothing to parse
            if ($null -eq $_.Exception.Response) { throw }
            $reader = New-Object System.IO.StreamReader($_.Exception.Response.GetResponseStream())
            $respText = $reader.ReadToEnd()
            $err = ($respText | ConvertFrom-Json).error
            # A 429 error message is expected to contain "retry after N second(s)"
            $retryAfter = [regex]::Match([string]$err.message, 'retry after (?<s>\d+) second')
            if ($err.code -eq '429' -and $retryAfter.Success) {
                # Enforce the retry limit before waiting again
                if ($retry -le 0) { throw "Retry limit exceeded" }
                # Wait one extra second as a safety margin, showing a countdown
                $secs = [int]::Parse($retryAfter.Groups['s'].Value) + 1
                Write-Host "Rate limit exceeded, wait for $secs seconds." -ForegroundColor Cyan
                while ($secs -gt 0) {
                    Write-Host "`r * $secs seconds to retry..." -ForegroundColor Green -NoNewline
                    Start-Sleep -Seconds 1
                    $secs--
                }
                Write-Host ("`r" + (' ' * 40) + "`r") -NoNewline
                $retry--
                continue
            }
            # Not a retryable 429: show the error body and rethrow
            Write-Host $respText -ForegroundColor Red
            throw
        }
    }

    # Timestamp taken after the successful call, kept numeric for the window comparisons
    $timeStampSecs = [Math]::Round($sw.Elapsed.TotalSeconds, 1)
    $result = [System.Text.Encoding]::UTF8.GetString($response) | ConvertFrom-Json
    $usage = $result.usage
    $Global:tokenCount += $usage.total_tokens
    $tokenCountDict[$timeStampSecs] = $usage.total_tokens

    # Sum tokens and requests over the last 60 seconds and drop older entries
    $tokenCount = 0
    $reqCount = 0
    $lessThan1Min = $timeStampSecs - 60
    $minTimeStamp = [double]::MaxValue
    # Loop over a copy of the keys: removing entries while enumerating the hashtable itself throws
    foreach ($key in @($tokenCountDict.Keys)) {
        if ($key -ge $lessThan1Min) {
            $tokenCount += $tokenCountDict[$key]
            $reqCount++
            if ($key -lt $minTimeStamp) { $minTimeStamp = $key }
        }
        else {
            $tokenCountDict.Remove($key)
        }
    }
    Write-Host "$($timeStampSecs)s $($usage.total_tokens)($($usage.prompt_tokens) + $($usage.completion_tokens)) tokens | $tokenCount TPM | $reqCount RPM | ($minTimeStamp ~ $timeStampSecs)" -ForegroundColor Magenta
    return $result.choices[0].message.content
}

# System prompt: "Please provide English transliterations of the following names:"
SetSystemPrompt '請提供下列人名英文音譯:'
[string[]] $names = "張飛、趙雲、黃忠、魏延、馬超、孔明、劉備、關羽、曹操、孫權、周瑜、孫策、呂布、袁紹、劉表、劉璋、張角、董卓、貂蟬、王允".Split("、")

# Loop until Ctrl+C; HTTP 429 responses are absorbed by waiting and retrying
while ($true) {
    $names | ForEach-Object {
        $res = CallComplete $_
        Write-Host $res.Substring(0, [Math]::Min($res.Length, 32)) '...'
    }
}
```
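The 429 detection in `CallComplete` can be isolated into a small function so the regex can be tested without calling the API. This sketch makes the same assumptions as the program: the error body has `error.code` and `error.message` fields, and the message contains "retry after N second". `Get-RetryAfterSeconds` is a hypothetical name, and the sample bodies are hand-written, not actual Azure responses.

```powershell
# Hypothetical helper: return the wait time (seconds) from a 429 error body, or $null.
# Assumes a body shaped like {"error":{"code":"429","message":"... retry after N second(s) ..."}}.
function Get-RetryAfterSeconds([string]$errorJson) {
    $err = ($errorJson | ConvertFrom-Json).error
    if ($err.code -ne '429') { return $null }
    $m = [regex]::Match([string]$err.message, 'retry after (?<s>\d+) second')
    if ($m.Success) { return [int]$m.Groups['s'].Value }
    return $null
}

# Hand-written sample bodies (not captured from the service)
Get-RetryAfterSeconds '{"error":{"code":"429","message":"Rate limit reached. Please retry after 52 seconds."}}'   # 52
Get-RetryAfterSeconds '{"error":{"code":"400","message":"Bad request"}}'                                          # no output ($null)
```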

This blog post addresses the issue of rate limits (RPM and TPM) set by OpenAI and Azure OpenAI API to prevent service abuse. The author provides a PowerShell script to dynamically adjust the calling rate to maximize throughput while avoiding HTTP 429 errors.
